Artificial intelligence (AI) is rapidly reshaping many aspects of our lives, from how we search for information to how we receive healthcare. One of the most talked-about developments in the mental health world is the emergence of AI therapy—virtual tools, chatbots, and platforms designed to provide therapeutic support through artificial intelligence.

On the surface, AI therapy may seem like an efficient, accessible, scalable, and even compassionate solution to the growing global mental health crisis. But as with any new technology, especially one dealing with human emotion and vulnerability, there are important ethical considerations and risks to explore.

Like many in our field of counselling and psychotherapy, our team at East Vancouver Counselling has been watching with interest as more and more automated therapy services are offered. A recent article in Time Magazine, “A Psychiatrist Posed As a Teen With Therapy Chatbots. The Conversations Were Alarming”, is worth reading for a more nuanced understanding of some of the potential concerns. In this article, we explore some of the benefits and concerns of AI therapy and what they mean for you as a client.

The Promise of AI Therapy

AI therapy tools—such as Woebot, Wysa, and others—offer several clear benefits:

- Accessibility: AI tools can provide immediate, 24/7 support, especially for individuals in rural or underserved areas where human therapists may not be available.

- Affordability: AI-based apps are often low-cost or free, making mental health support more financially accessible.

- Anonymity: For people who feel stigma or discomfort about reaching out to a human therapist, AI tools can offer a lower-pressure entry point into care.

- Scalability: AI tools can potentially reach millions of users at once, a scale human therapists simply cannot match.

- Diagnostic support: AI may be able to identify subtle patterns and nuanced expressions of mental health distress. Therapists working with AI tools may be able to develop treatment plans and implement strategic interventions more quickly and effectively.

Research has shown that AI mental health apps can improve outcomes for some users with certain types of mild to moderate symptoms. For instance, a randomized controlled trial of Woebot, an AI-driven CBT chatbot, found that young adults who used it had a significant reduction in depression symptoms after just two weeks, compared with an information-only control group; anxiety symptoms decreased in both groups (Fitzpatrick et al., 2017, JMIR Mental Health).

Similarly, a real-world evaluation of Wysa, another AI mental health app, found that users with self-reported symptoms of depression who engaged with the app more often showed greater improvement than those who used it less (Inkster et al., 2018).

There are also promising features being explored to help fine-tune and improve the early diagnosis of mental health conditions. Under the guidance of a qualified mental health professional, AI can help identify subtle patterns and escalation of symptoms, and offer evidence-based approaches to improve mental health outcomes.

The Ethical Risks and Challenges

Despite these promising aspects, AI therapy raises significant ethical concerns—many of which cannot be ignored.

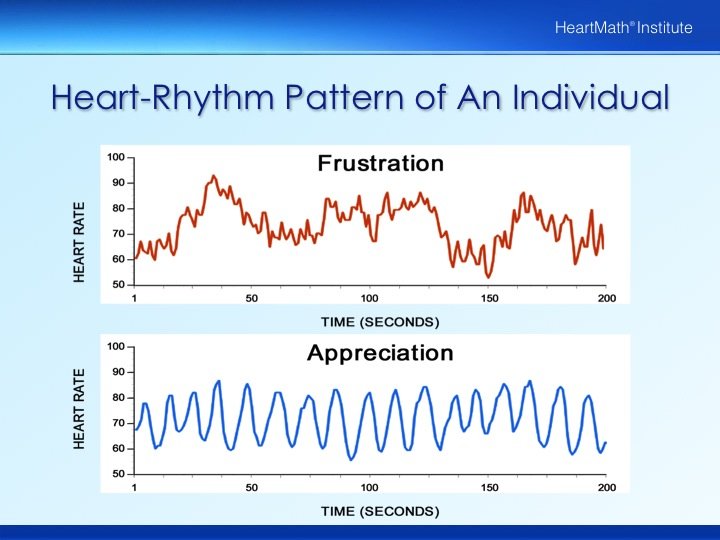

1. Lack of Emotional Understanding

AI lacks empathy and lived experience. It can simulate empathy, but it does not understand or feel it. Studies caution that while AI can assist with therapeutic tasks, it cannot form a genuine therapeutic alliance, a factor that research consistently links to lasting change (Norcross & Lambert, 2018, Psychotherapy). Human beings are wired to respond to authentic empathy, and our neurobiology is oriented to tune in to very subtle cues as we seek safety and connection as part of our healing process. Mirror neurons, sometimes called “empathy neurons”, are thought to pick up on subtle cues in others’ expressions and help us connect. AI can answer some questions and may seem authentic on some levels, but the element of human connection is missing from AI therapy.

2. Privacy and Data Security Risks

A 2023 report by Mozilla found that many mental health apps, including those using AI, had poor data privacy practices, sharing or selling sensitive data without informed consent (“Privacy Not Included”, Mozilla Foundation, 2023). As many as 74% of AI therapy apps have been rated high risk for privacy, and 15% have been rated a “critical risk” to your privacy and security.

3. Accountability and Transparency Issues

Currently, no global regulatory framework ensures ethical oversight of AI therapy tools. If an AI app gives harmful advice, there is often no clinician or governing body that can be held accountable. This was highlighted by a 2022 study calling for urgent AI regulation in mental healthcare (Luxton, 2022, The Journal of Technology in Behavioral Science). There is no gatekeeping framework for apps that give wrong information, and because these tools operate within a corporate model, people have limited recourse if they are harmed by information they receive from an AI bot.

4. Bias and Inequality

AI reflects the biases of the data it is trained on. This can lead to poorer outcomes for racialized, queer, or neurodivergent users. For example, a 2019 study found that a widely used healthcare algorithm underestimated the health needs of Black patients compared with equally sick white patients (Obermeyer et al., 2019, Science).

5. Potential for Misguided Care

As outlined in the Time article mentioned above, AI chatbots tend to have a sycophantic bias: their responses are geared toward pleasing, reassuring, and calming you. However, humans have a built-in tendency toward confirmation bias, so when an AI validates false or misleading beliefs or patterns, it can reinforce negative or challenging symptoms. AI is not designed to challenge, or even to identify, psychopathology, and it could be harmful for people with certain mental health conditions that may not yet be diagnosed.

Human Connection Still Matters

Therapeutic healing often stems from a safe, attuned relationship with a real person. While AI can offer technical guidance or provide CBT exercises, it lacks the relational depth, intuition, and contextual sensitivity of a trained therapist. The “therapeutic alliance”, the relationship between client and therapist, is one of the most consistent predictors of treatment success (Horvath et al., 2011, Psychotherapy).

That said, blended care models are showing promise. Parts of therapy involve psychoeducation, building practical skills, and recognizing patterns, and these are areas where digital tools can help. Combining digital tools with in-person or online therapy can enhance engagement and skill retention (Torous et al., 2020, The Lancet Psychiatry). We believe that AI has the potential to be a helpful supplement – not a substitute – for good therapy.

Moving Forward with Caution and Care

At East Vancouver Counselling, we value innovation and evidence-based services, but we are also committed to ethical, trauma-informed, and human-centred care. As AI therapy continues to evolve, we encourage clients and clinicians alike to:

- Ask critical questions about the tools you use, and bring your AI chats to your therapist for a second opinion.

- Understand the limits of AI and prioritize safety. Your digital privacy matters now more than ever.

- Look at the contracts and agreements for AI tools and bot therapy to make sure that your rights are not being compromised, and that there is ethical oversight of the process you’ll be trusting your mental health to.

- Choose technologies that are transparent, research-backed, and designed with care.

- Understand that some mental health concerns, including attachment-based issues such as loneliness, abandonment, and relationship difficulties, as well as some more complex psychopathology, may still be best supported by a qualified, professional, real-world counsellor with whom you feel a good fit.

While AI tools are playing a very active role in expanding access to mental health care, we recommend that, at this time, AI therapy bots not replace the unique healing power of human connection, empathy, and relationship.

Need Support?

Our team of licensed and registered clinical counsellors and psychotherapists offers trauma-informed, personalized therapy both in-person and online. While AI therapists may be available and helpful for those late-night panic attacks, we will always want to connect with you as soon as possible afterward to help you debrief and work through your experience. We are real, live humans, and we offer a wide range of services, from low-cost options to highly specialized and experienced support. We are all committed to offering caring and ethical support, and we are all bound by a code of ethics and shared standards of practice.

If you’re curious about how digital tools might complement your therapy, we’re happy to have that conversation with you.

Sources:

Clark, A. (2025, June 12). A psychiatrist posed as a teen with therapy chatbots. The conversations were alarming. TIME. https://time.com/7291048/ai-chatbot-therapy-kids/

Fitzpatrick, K. K., Darcy, A., & Vierhile, M. (2017). Delivering cognitive behavior therapy to young adults with symptoms of depression and anxiety using a fully automated conversational agent (Woebot): A randomized controlled trial. JMIR Mental Health.

Horvath, A. O., Del Re, A. C., Flückiger, C., & Symonds, D. (2011). Alliance in individual psychotherapy. Psychotherapy.

Inkster, B., Sarda, S., & Subramanian, V. (2018). An empathy-driven, conversational artificial intelligence agent (Wysa) for digital mental well-being: Real-world data evaluation mixed-methods study. JMIR mHealth and uHealth.

Luxton, D. D. (2022). Ethical and legal challenges in the use of artificial intelligence in mental health care. The Journal of Technology in Behavioral Science.

Mozilla Foundation. (2023). Privacy Not Included – Mental Health Apps Report.

Norcross, J. C., & Lambert, M. J. (2018). Psychotherapy relationships that work III. Psychotherapy.

Obermeyer, Z., Powers, B., Vogeli, C., & Mullainathan, S. (2019). Dissecting racial bias in an algorithm used to manage the health of populations. Science.